Thirty-five years ago, I bought a Fluke 77 multimeter (Series I), which was pretty expensive by the standards of the day. I’ve used it moderately ever since, changing the battery every couple of years but never having it repaired or calibrated. I never had anything to calibrate it against, anyway.

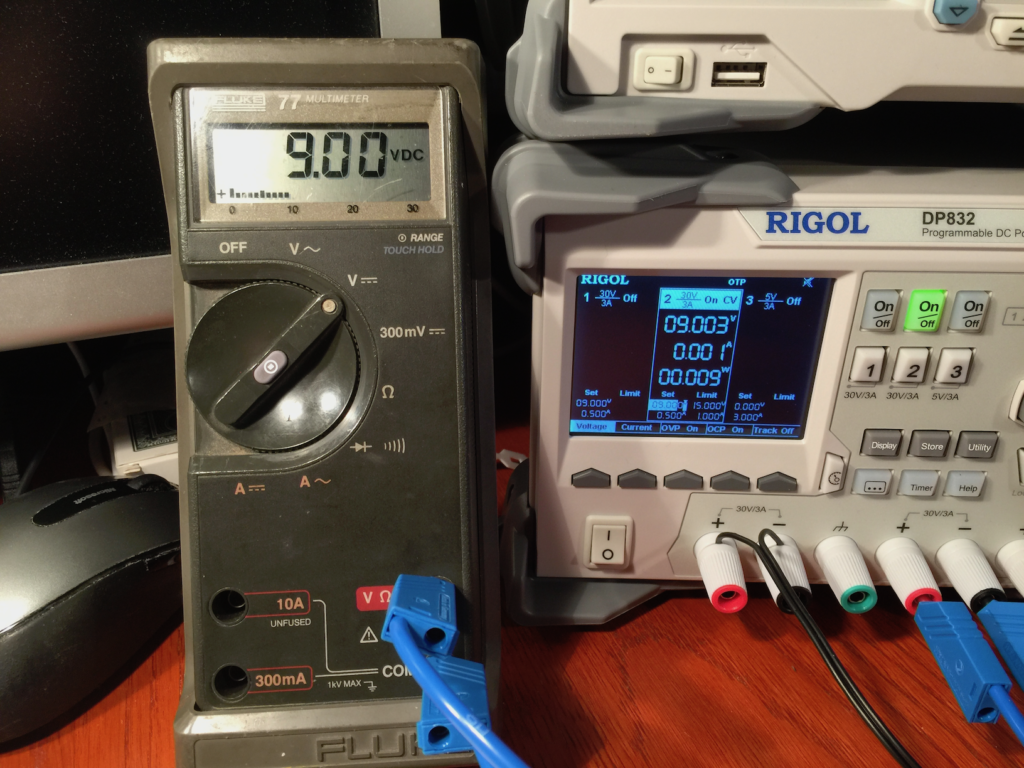

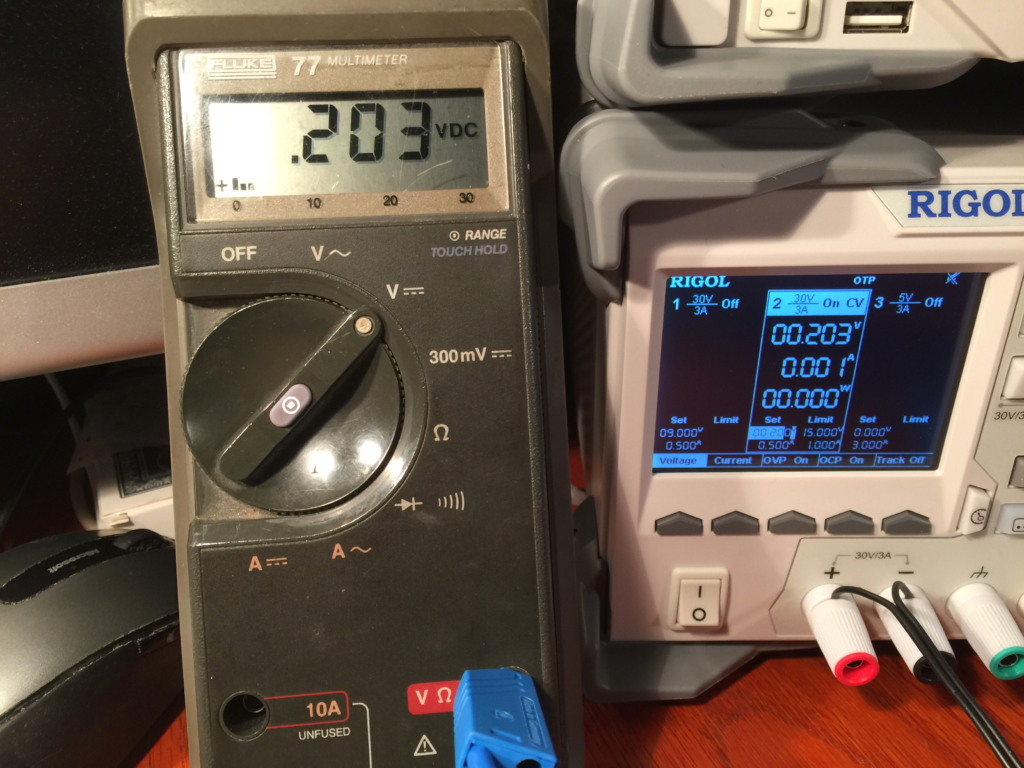

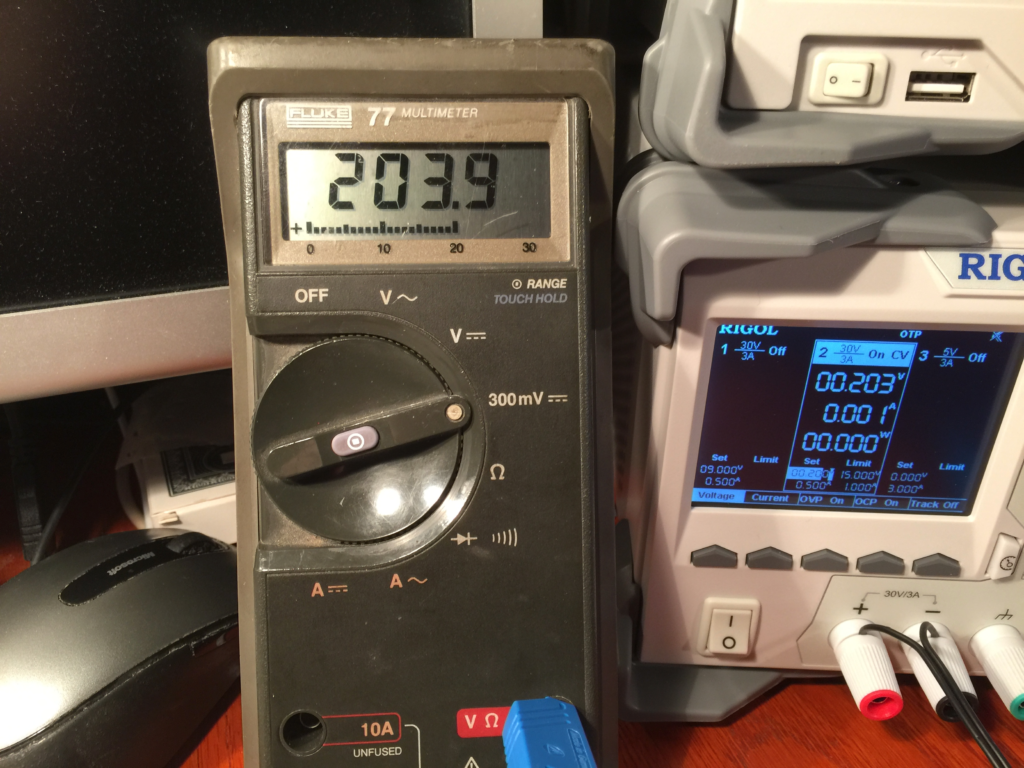

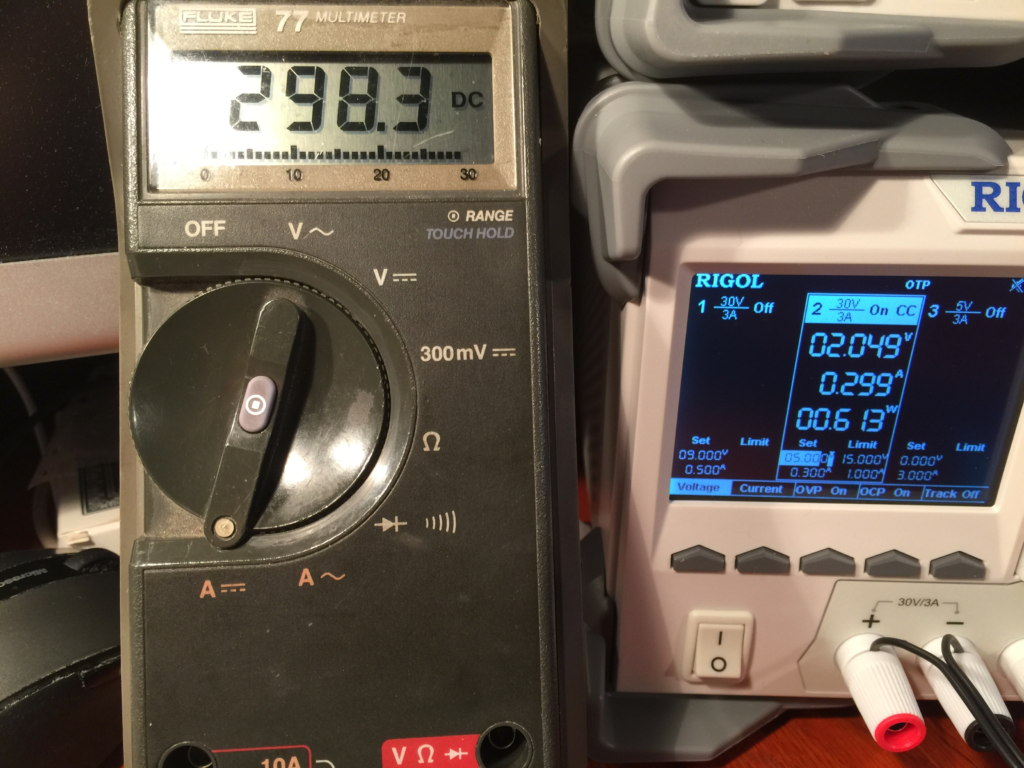

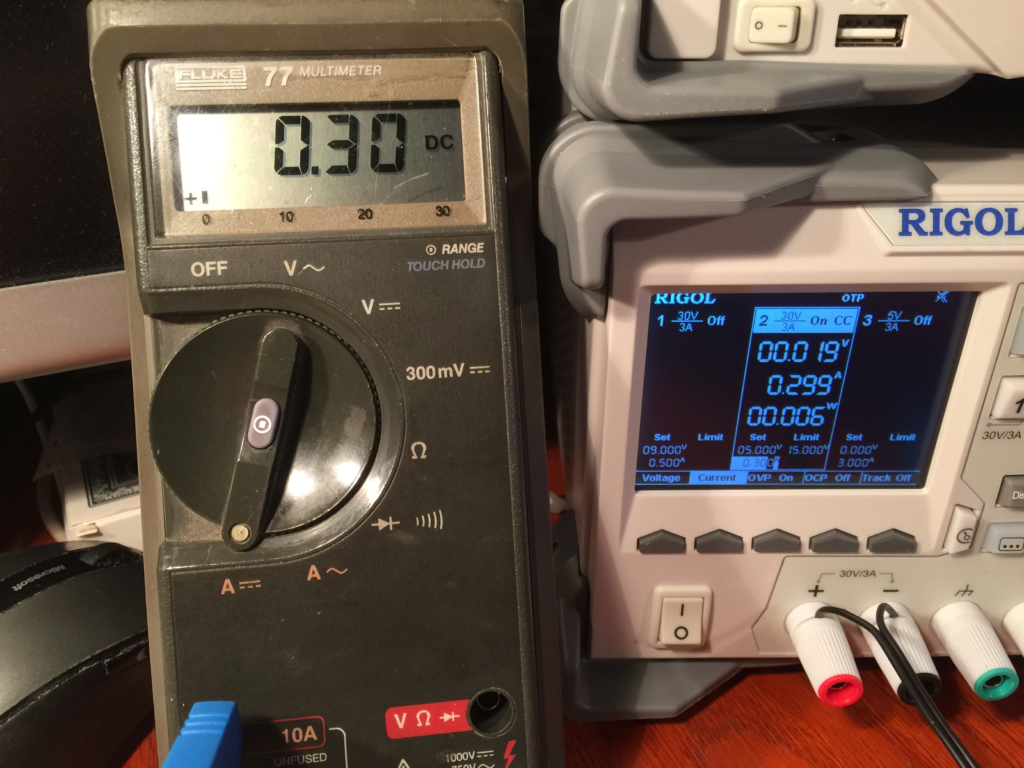

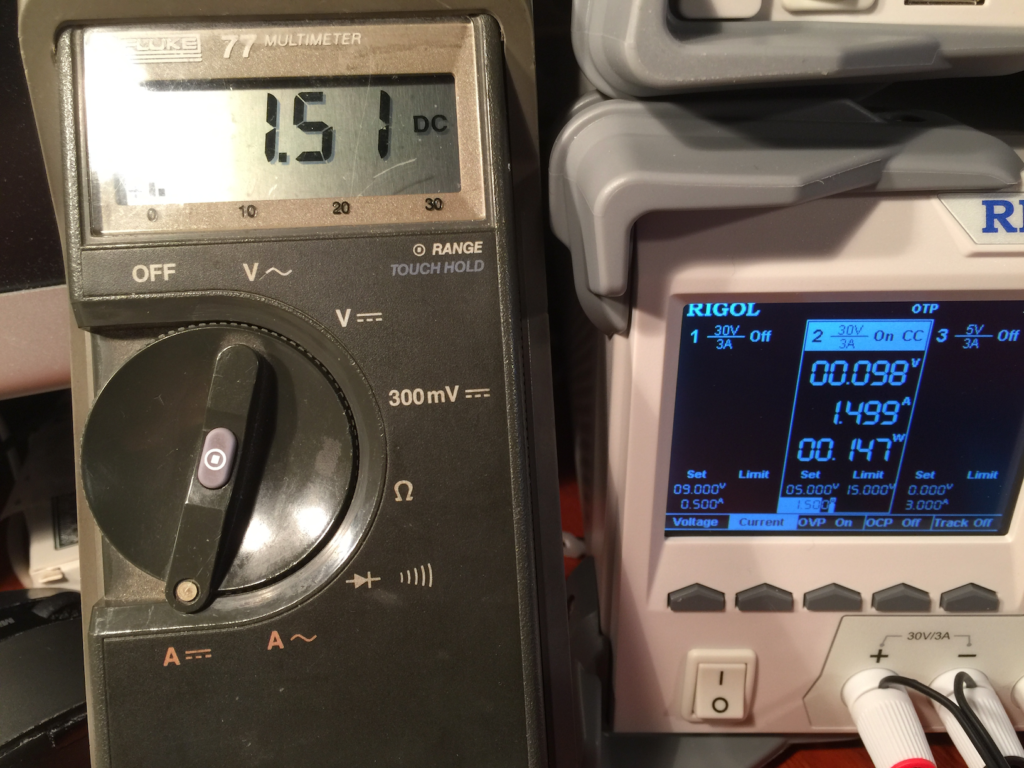

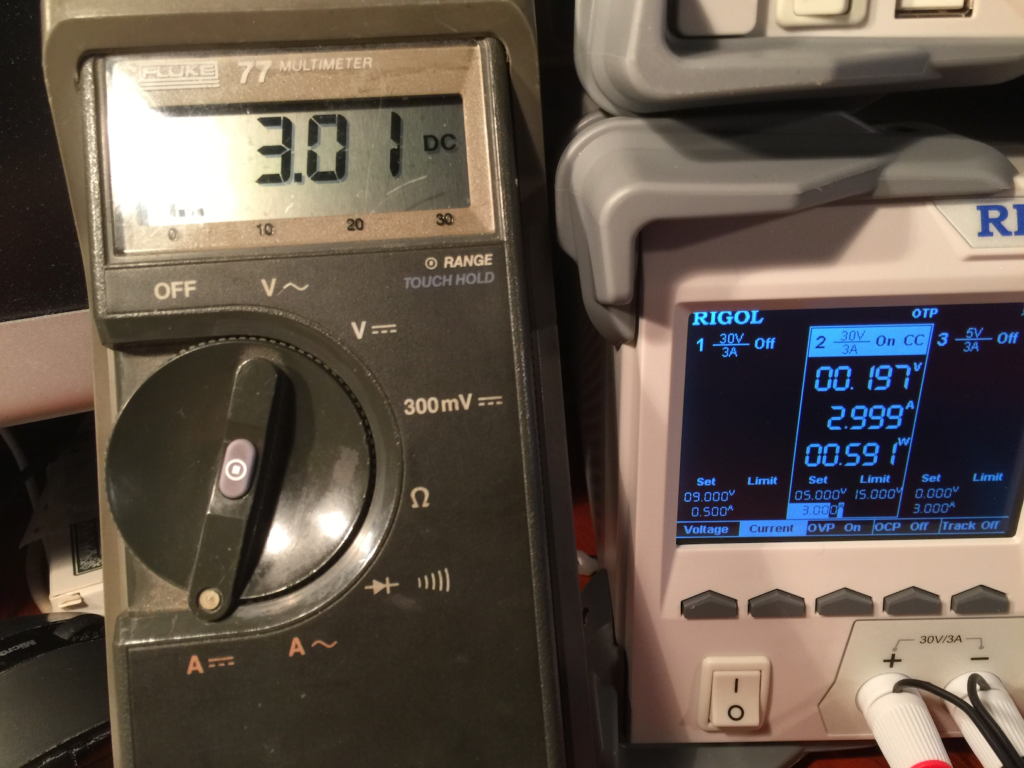

Recently, I got myself a Rigol DP832 bench power supply, which has a stated accuracy of around 0.1% on all parameters, so I thought it would be interesting to see how much the Fluke differs from it, i.e. how much it has drifted over the last 35 years. I think the pictures speak for themselves, showing the volt, millivolt, amp, and milliamp ranges: the readings match, differing only in the least significant digit. In other words, rounding errors. Wow.
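
For anyone who wants to put a number on a comparison like this, here is a minimal sketch of the arithmetic. The function names and the example values are made up for illustration (they are not the readings from my pictures); it just expresses the gap between a reference value and a meter reading both as a percentage and as counts of the meter's last displayed digit.

```python
def percent_error(reference: float, reading: float) -> float:
    """Deviation of the meter reading from the reference, in percent."""
    return 100.0 * (reading - reference) / reference


def counts_off(reference: float, reading: float, resolution: float) -> int:
    """Deviation expressed in counts of the meter's least significant digit.

    `resolution` is the value of one count on the selected range,
    e.g. 0.01 for a display that shows hundredths of a volt.
    """
    return round((reading - reference) / resolution)


if __name__ == "__main__":
    # Hypothetical example values, NOT measurements from this post.
    reference_volts = 5.00   # what the supply claims to output
    meter_volts = 5.01       # what the meter displays
    resolution = 0.01        # one count on the assumed range

    print(f"error: {percent_error(reference_volts, meter_volts):.2f} %")
    print(f"counts off: {counts_off(reference_volts, meter_volts, resolution)}")
```

A result of zero or one count means the two instruments agree as closely as the display can show, which is what I mean by rounding errors.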