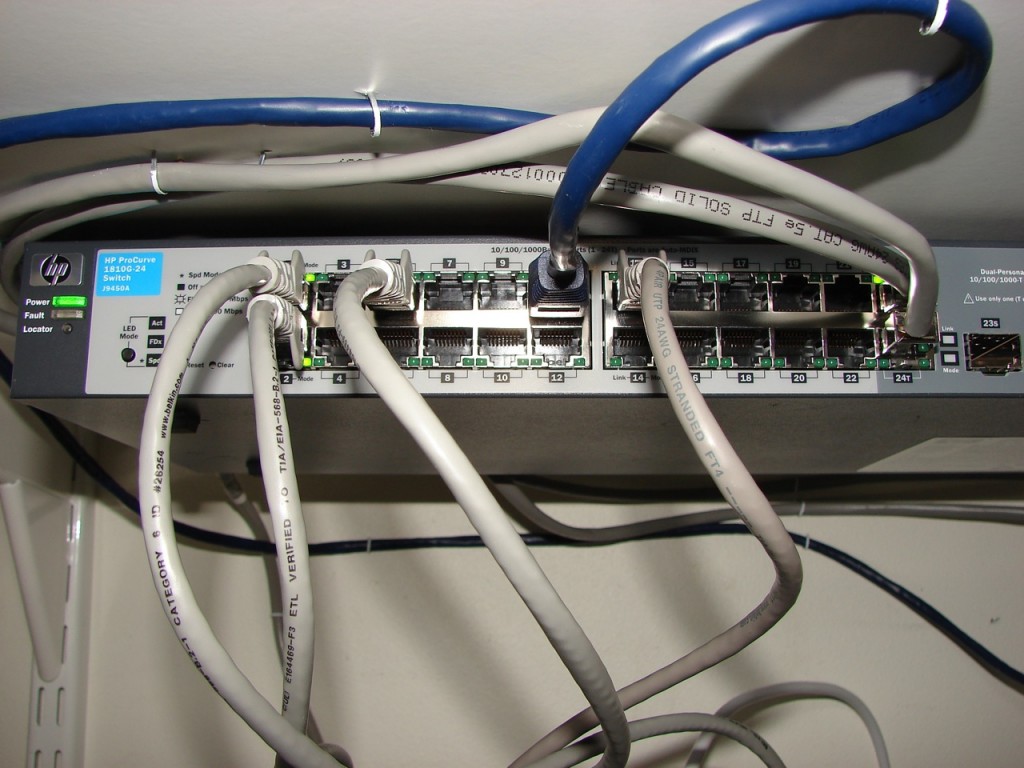

As I already bragged about, I got me one of those delicious little OSX Mini Snow Leopard Server boxes. So sweet you could kiss it. I just got everything together to make it run a subversion server through Apache, too, and as a way to document that process, I could just as well make a post out of it. Then I can find it again later for my own needs.

First of all, subversion server is already a part of the OSX Snow Leopard distribution, so there is no need to go get it anywhere. Mine seems to be version 1.6.5, according to svnadmin. Out of the box, however, apache is not enabled to connect to subversion, so that needs to be fixed.

We’ll start by editing the httpd.conf for apache to load the SVN module. You’ll find the file at:

/etc/apache2/httpd.conf

Uncomment the line:

#LoadModule dav_svn_module libexec/apache2/mod_dav_svn.so

Somewhere close to the end of the file, add the following line:

Include "/private/etc/apache2/extra/httpd-svn.conf"
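If you'd rather script those two edits than do them by hand, something like the following sketch might do. The function name `enable_svn` is my own invention, and it assumes the commented-out LoadModule line looks exactly like the one above. It keeps a backup copy next to the file and only appends the Include line if it isn't there yet.

```shell
# Sketch: enable mod_dav_svn in the httpd.conf given as $1.
# enable_svn is a hypothetical helper name, not part of Apache or OSX.
enable_svn() {
  conf="$1"
  include_line='Include "/private/etc/apache2/extra/httpd-svn.conf"'

  # Keep a backup, then rewrite the file from it.
  cp "$conf" "$conf.bak" || return 1

  # Strip the leading '#' from the dav_svn LoadModule line.
  sed 's|^#\(LoadModule dav_svn_module .*\)$|\1|' "$conf.bak" > "$conf" || return 1

  # Append the Include line only if it is not already present.
  grep -qxF "$include_line" "$conf" || printf '%s\n' "$include_line" >> "$conf"
}

# On the server, in a root shell:
#   enable_svn /etc/apache2/httpd.conf && apachectl configtest
```

Running `apachectl configtest` afterwards should complain until the httpd-svn.conf file actually exists, so don't worry if it does at this point.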

Now we need to create that httpd-svn.conf file. If you don’t have the “extra” dir, make it, then open the empty file and add in:

<Location /svn>

DAV svn

SVNParentPath /usr/local/svn

AuthType Basic

AuthName "Subversion Repository"

AuthUserFile /private/etc/apache2/extra/svn-auth-file

Require valid-user

</Location>
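The same file can also be written from the shell in one go, which is handy if you ever need to redo the setup. This is only a sketch: `write_svn_conf` is a name I made up, and the directory is passed in as an argument so you can try it on a scratch directory first.

```shell
# Sketch: write httpd-svn.conf into the directory given as $1.
# write_svn_conf is a hypothetical helper name.
write_svn_conf() {
  dir="$1"
  mkdir -p "$dir" || return 1

  # Quoted 'EOF' keeps the shell from expanding anything in the body.
  cat > "$dir/httpd-svn.conf" <<'EOF'
<Location /svn>
  DAV svn
  SVNParentPath /usr/local/svn
  AuthType Basic
  AuthName "Subversion Repository"
  AuthUserFile /private/etc/apache2/extra/svn-auth-file
  Require valid-user
</Location>
EOF
}

# On the server, in a root shell:
#   write_svn_conf /private/etc/apache2/extra
```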

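Save and exit. Then create the password file and add the first user with the command below. The path has to match the AuthUserFile line in the config above:

sudo htpasswd -c /private/etc/apache2/extra/svn-auth-file username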

…where “username” is your username, of course. You’ll be prompted for the desired password. You can add more users with the same command, but drop the -c switch: it creates the file from scratch and would wipe out the users already in it.

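Time to create the svn folder and a repository. Create /usr/local/svn and cd into it (you’ll most likely need sudo for this and the next command, since /usr/local belongs to root). Then create your first repository by: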

svnadmin create firstrep

Since Apache is going to access this, the owner should be the user Apache runs as, which is www on OSX. Do that:

sudo chown -R www firstrep
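Put together, the repository steps could be sketched as a small shell function. The name `create_repo` and its three arguments (parent folder, repository name, owner) are my own choices for illustration. It only relies on `mkdir`, `svnadmin create` and `chown`, exactly as above.

```shell
# Sketch: create a repository under a parent folder and hand it to an owner.
# create_repo is a hypothetical helper; arguments: parent dir, repo name, owner.
create_repo() {
  parent="$1"
  name="$2"
  owner="$3"

  mkdir -p "$parent" || return 1
  svnadmin create "$parent/$name" || return 1

  # Only change ownership when an owner was given.
  if [ -n "$owner" ]; then
    chown -R "$owner" "$parent/$name" || return 1
  fi
}

# On the server, in a root shell:
#   create_repo /usr/local/svn firstrep www
```

Because the config uses SVNParentPath, any further repository created under the same parent folder should show up under /svn/ without touching the Apache config again.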

Through Server Admin, stop and restart Web service. Check that no errors appear. Then use your fav SVN client to check if things work. Normally, you’d be able to address your subversion repository using:

http://yourserver/svn/firstrep

Finally, don’t forget to use your SVN client to create two folders in the repository, namely “trunk” and “tags”. Your project should end up under “trunk”.
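With the command line client, both folders can be created directly in the repository in a single commit, no working copy needed. A minimal sketch, where `init_layout` is a made-up helper name and the repository URL is whatever yours turned out to be:

```shell
# Sketch: create trunk and tags in one commit, straight against the repository URL.
# init_layout is a hypothetical helper name.
init_layout() {
  repo_url="$1"
  svn mkdir -m "Create trunk and tags" "$repo_url/trunk" "$repo_url/tags"
}

# Example, using the URL from above (svn will ask for your username and password):
#   init_layout http://yourserver/svn/firstrep
```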

Once up and running, this repository works perfectly with Panic’s Coda, which can keep an entire website under source code control. If you don’t know Coda, it’s a website editor of the text editor kind, without many fancy graphic tools, but it does help with stylesheets and stuff. It’s for the hands-on developer, you could say.

The way you manage a site in Coda is that you have a local copy of your site, typically a load of PHP files, which are version controlled against the subversion repository, then you upload the files to the production server. Coda keeps track of both the repository server and the production server for each site. The one feature that is missing is a simple way of having staged servers, that is, uploading to a test server and only once in a while copying it all up to the production server. But that can be considered a bit outside of the primary task of the Coda editor, of course.

You could say that if your site isn’t mission critical, but more of the 200 visitors a month kind, you can work directly against the production server, especially since rolling back and undoing changes is pretty slick using the Coda/subversion combo. But it does require good discipline, good nerves, and a site you don’t really, truly need for your livelihood. You can break it pretty bad and jumble up your versions, I expect. Plus, don’t forget, the database structure and contents aren’t any part of your version control if you don’t take special steps to accomplish that.

Coda doesn’t let you access all the functionality of subversion. As far as I can determine, it doesn’t have provisions for tag and branch, for instance. But it does have comparisons, rollbacks and most of the rest. The easiest way to do tagging would be through the command line. Or possibly by using a GUI SVN client, of which there are several for OSX. I’m just in the process of testing the Syncro SVN Client. Looks pretty capable, but not all that cheap.
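For the command line route, a tag in subversion is just a cheap copy of trunk into the tags folder. Here is a minimal sketch, assuming the repository has the “trunk” and “tags” folders described earlier. `tag_trunk` is a name I made up, and the tag name is whatever you like.

```shell
# Sketch: tag the current trunk by copying it into tags/<name> on the server.
# tag_trunk is a hypothetical helper; arguments: repository URL, tag name.
tag_trunk() {
  repo_url="$1"
  tag="$2"
  svn copy -m "Tag $tag" "$repo_url/trunk" "$repo_url/tags/$tag"
}

# Example:
#   tag_trunk http://yourserver/svn/firstrep release-1.0
```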