If geoblocking was done on Main street… hilarious.

Enemy number one

The US gov is quickly turning into corporate threat number one:

Apple has long suspected that servers it ordered from the traditional supply chain were intercepted during shipping, with additional chips and firmware added to them by unknown third parties in order to make them vulnerable to infiltration, according to a person familiar with the matter.

If this is really the case, if the US govt is tapping servers like this at any significant scale, then Apple implementing end-to-end encryption in most of their products must mean that the govt is losing a hell of a lot more data than just what they could get with a warrant.

The ability to recover data with a warrant is then just a marginal thing. The real problem is that their illegal taps stop working. Which means that the FBI case is a sham on a deeper level than it appears. The real panic is then about the server compromises failing.

And, of course, the end-to-end encryption with no keys server-side is also the solution for Apple. Implants in the servers then have relatively little impact, at least on their customers. The server-to-client communications (SSL) would be compromised, but not the content of the messages inside.

If the govt loses this battle, which I’m pretty sure they will, the next frontier must be the client devices. Not just targeted client devices, which can already be compromised in hardware and software, but we’re talking massive compromises of *all* devices. Having modifications in the chips and firmware of every device coming off the production lines. Anything less than this would mean “going dark” as seen from the pathological viewpoint of the government.

Interestingly, Apple has always tended to try to own their primary technologies, for all kinds of reasons. This is one reason more. As they’re practically the only company in a position to achieve that, to own their designs, their foundries, their assembly lines, with the right technology they could become the only trustworthy vendor of client devices in the world. No, they don’t own their foundries or assembly lines yet, but they could.

If this threat becomes real, or maybe is real already, a whole new set of technologies is needed to verify the integrity of designs, chips, boards, packaging, and software. That in itself will change the market significantly.

The opportunity of taking the high road to protect their customers against all evildoers, including their own governments, *and* finding themselves in almost a monopoly situation when it comes to privacy at the same time, is breathtaking. So breathtaking, in fact, that it would, in extremis, make a move of the whole corporation out of the US to some island somewhere not seem so farfetched at all. Almost reasonable, in fact.

Apple could become the first corporate state. They would need an army, though.

As a PS… maybe someone could calculate the cost to the USA of all this happening?

Even the briefest of cost/benefit calculations as seen from the government’s viewpoint leads one to the conclusion that the leadership of Apple is the most vulnerable target. There is now every incentive for the government to have them replaced by more government-friendly people.

I can think of: smear campaigns, “accidents”, and even buying up a majority share in Apple through strawmen to have another board elected.

Number one, defending against smear campaigns, could partly explain the proactive “coming out” of Tim Cook.

After having come to the conclusion that the US govt has a definite interest in decapitating Apple, one has to realize this will only work if the culture of resistance to the government is limited to the very top, that is, if eliminating Tim Cook would lead to an organisation more amenable to the wishes of the government.

From this, it’s easy to see that Apple needs to ensure that this culture of resistance, this culture of fighting for privacy, is pervasive in the organisation. Only if they can make that happen, and make it clear to outsiders that it is pervasive, only then will it become unlikely that the government will try, one way or the other, to get Tim Cook replaced.

Interestingly, just last week, a number of important but unnamed engineers at Apple talked to news organisations, telling them that they’d rather quit than help enforce any court orders against Apple in this dispute. This coordinated leak makes a lot more sense to me now. It’s a message that makes clear that replacing Tim Cook, or even the whole executive gang, may not get the govt what it wants, anyway.

I’m sure Apple is internally making as sure as it possibly can that the leadership cadre is all on the same page. And that the government gets to realize that before they do something stupid (again).

Protonmail

Protonmail, a secure mail system, is now up and running for public use. I’ve just opened an account and it looks just like any other webmail to the user. Assuming everything is correctly implemented as they describe, it will ensure your email contents are encrypted end-to-end. It will also make traffic analysis of metadata much more difficult. In particular, at least when they have enough users, it will be difficult for someone monitoring the external traffic to infer who is talking to whom and build social graphs from that. Not impossible, mind you, but much more difficult.

If you want to really hide who you’re talking to, use the Tor Browser to sign up and don’t enter a real “recovery email” address (it’s optional), and then never connect to Protonmail except through Tor. Not even once. Also, never tell anyone what your Protonmail address is over any communication medium that can be linked to you, never even once. Which, of course, makes it really hard to tell others how to find you. So even though Protonmail solves the key distribution problem, you now have an address distribution problem in its place.

But even if you don’t go the whole way and meticulously hide your identity through Tor, it’s still a very large step forwards in privacy.

And last, but certainly not least, it’s not a US or UK based business. It’s Swiss.

John Oliver on the Apple/FBI thing

If you for some reason missed John Oliver’s explanation of the Apple vs FBI thing, do watch it now.

Metaverse

Developing the Metaverse: Kickstarter project. Yes, I’m a little bit a part of it, and I’ve signed up, too. It would be pretty awesome if it could be done.

The FBI in full Honecker mode

Consider this:

Obama: cryptographers who don’t believe in magic ponies are “fetishists,” “absolutists”

…and even worse, this:

Let’s consider this for a bit. In particular the “going dark” idea. The idea that cryptography makes the governments of the world lose access to a kind of information they always had access to. That idea is plain wrong for the most part, since they never had access to this stuff.

Yes, some private information used to be accessible with warrants, such as contents of landline phone calls and letters in the mail, the paper kind that took forever to get through. But there never was much private information in those. We didn’t confide much in letters and phone calls.

But the major part of the information we carry around on our phones was never within reach of the government. Most of what we have there didn’t even exist back then, like huge amounts of photographs, and in particular dick pics. We didn’t do those before. Most of what we write in SMS and other messaging such as Twitter and even Facebook, was only communicated in person before. We didn’t write down stuff like that at all. Seriously, sit down and go through your phone right now, then think about how much of that you ever persisted anywhere only 10 or 15 years ago. Not much, right? It would have been completely unthinkable to have the government record all our private conversations back then, just to be able to plow through them in case they got a warrant at some future point in time.

So, what the government is trying to do under the guise of “not going dark” is shine a light where they never before had the opportunity or the right to shine a light, right into our most private conversations. The equivalence would be if they simply had put cameras and microphones into all our living spaces to monitor everything we do and say. That wasn’t acceptable then. And it shouldn’t be acceptable now, when the phone has become that private living space.

If they get to do this, there is only one space left for privacy, your thoughts. How long until the government will claim that space as well? It may very well become technically feasible in the not too distant future.

Patterns: authentication

The server generally authenticates to the client by its SSL certificate. One very popular way of doing this is to have the client trust a well known authority, a certificate authority (CA) and then verify that the name of the server’s certificate matches expectations, and that the server’s certificate is signed by the CA and not yet expired. This is an excellent method if previously unknown clients do drive-by connections to the server, but has no real value if all the clients are pre-approved for a particular service on a group of particular servers. In that case, it’s just as easy, much cheaper, and arguably more secure, to provide all the clients with the server’s public certificate ahead of time, during installation, and let them verify the server certificate against that public key.
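A minimal sketch of that last idea, assuming the client was handed the SHA-256 fingerprint of the server's DER-encoded certificate at installation. The function names and the placeholder bytes are hypothetical. On a live connection the DER bytes would come from `ssl.SSLSocket.getpeercert(binary_form=True)`.

```python
import hashlib
import hmac

def fingerprint(der_cert: bytes) -> str:
    """SHA-256 fingerprint of a DER-encoded certificate, as lowercase hex."""
    return hashlib.sha256(der_cert).hexdigest()

def matches_pin(der_cert: bytes, pinned_fingerprint: str) -> bool:
    """True only if the presented certificate is exactly the one shipped with the client."""
    return hmac.compare_digest(fingerprint(der_cert), pinned_fingerprint.lower())

# Placeholder bytes standing in for real certificates (hypothetical data).
installed_cert = b"server certificate shipped with the client"
pin = fingerprint(installed_cert)

print(matches_pin(installed_cert, pin))
print(matches_pin(b"some other certificate", pin))
```

No CA is consulted here: the client accepts the one certificate it was installed with and nothing else.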

But how do we authenticate a client to the server? Well, we provide the server with the client’s public key during installation and then verify that we have the right client on the line during connections.

We could let the client authenticate against the server using the built-in mechanisms in HTTPS, but the disadvantages of that are numerous, not least of which is getting it to work, and maintaining it over any number of operating system updates. “Fun” is not the name of that game. Another major disadvantage is that the protection and authentication that comes from the HTTPS session are ephemeral; they don’t leave a permanent record. If you want to verify after the fact that a particular connection was encrypted and verified using HTTPS, all you have to go on are textual logs that say so. You can’t really prove it.

What I’m describing now is under the precondition that you’ve set up a key pair for the server, and a key pair for the client, ahead of time and that both parties have the other party’s public key. (Exactly how to get that done securely is the subject of a later post.)

Step 1

The client creates a block consisting of:

- A sequence number, which is one greater than the last sequence number the client has ever used.

- Current date and time

- Client identifier

- A digital signature on the above three elements together, using the client’s private key

The client sends this block to the server.

Step 2

The server (front-end) then uses the client identifier to retrieve the client’s public key, verifies the signature, and checks that the sequence number has never been used before. The server also checks that the date and time are not too far in the past or the future.

If everything checks out fine, the server records the session in the database and updates the high-water mark (last used sequence number from this client).

Step 3

The server creates a block consisting of:

- The client’s sequence number

- Current date and time

- Client identifier

- Server identifier

- A digital signature on those elements together, using the server’s private key
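The three steps can be sketched roughly as follows. This is an illustration under stated assumptions, not the original implementation: the signatures use a toy textbook RSA built from known Mersenne primes (not secure, only there to keep the example self-contained), the blocks are serialized as JSON, the 300 second clock tolerance is arbitrary, and all names (`make_block`, `check_block`, `Server`) are hypothetical.

```python
import hashlib
import json
import time

# Toy RSA: NOT secure, a stand-in for a real signature library.
def make_keypair(p: int, q: int, e: int = 65537):
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))
    return (n, e), (n, d)  # (public key, private key)

def _digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def sign(data: bytes, private_key) -> int:
    n, d = private_key
    return pow(_digest(data, n), d, n)

def verify(data: bytes, signature: int, public_key) -> bool:
    n, e = public_key
    return pow(signature, e, n) == _digest(data, n)

def _canonical(fields: dict) -> bytes:
    return json.dumps(fields, sort_keys=True).encode()

def make_block(fields: dict, private_key) -> dict:
    """Steps 1 and 3: the fields plus a signature over all of them together."""
    return {**fields, "signature": sign(_canonical(fields), private_key)}

def check_block(block: dict, public_key) -> bool:
    fields = {k: v for k, v in block.items() if k != "signature"}
    return verify(_canonical(fields), block["signature"], public_key)

class Server:
    MAX_SKEW = 300  # seconds of clock difference tolerated (assumed value)

    def __init__(self, server_id, private_key, client_public_keys):
        self.server_id = server_id
        self.private_key = private_key
        self.client_public_keys = client_public_keys
        self.high_water = {}  # client id -> last used sequence number
        self.sessions = []    # stands in for the session table in the database

    def authenticate(self, block, now=None):
        """Step 2, then step 3. Returns the signed reply, or None on any failure."""
        now = int(time.time()) if now is None else now
        public_key = self.client_public_keys.get(block["client"])
        if public_key is None or not check_block(block, public_key):
            return None  # unknown client or bad signature
        if block["seq"] <= self.high_water.get(block["client"], 0):
            return None  # sequence number already used
        if abs(now - block["time"]) > self.MAX_SKEW:
            return None  # too far in the past or the future
        self.high_water[block["client"]] = block["seq"]
        self.sessions.append(block)  # the signature is kept with the record
        reply = {"seq": block["seq"], "time": now,
                 "client": block["client"], "server": self.server_id}
        return make_block(reply, self.private_key)

client_pub, client_priv = make_keypair(2**61 - 1, 2**89 - 1)
server_pub, server_priv = make_keypair(2**107 - 1, 2**127 - 1)
server = Server("server-1", server_priv, {"client-42": client_pub})

now = int(time.time())
request = make_block({"seq": 1, "time": now, "client": "client-42"}, client_priv)
reply = server.authenticate(request)
print(reply is not None and check_block(reply, server_pub))
print(server.authenticate(request))  # the same block sent a second time
```

The client checks the reply with the server's public key in exactly the same way the server checked the request, so neither side has to trust the channel in between.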

Benefits

Doing it this way creates a verifiable record in the database about the authentication. The signature is saved and can be verified again at any time. This allows secure non-repudiation.

Creating the authentication as a signed block also means that the client does not necessarily need to communicate directly with the server. If a client needs to deliver documents to another system, which in turn forwards them, it can also deliver the authentication block the same way. The forwarding system does not need to hold any secrets for the actual client to be able to do that. This allows us any number of intermediate message stores, even dynamically changing paths, with maintained authentication characteristics.

I should also note that doing the authentication this way decouples the mechanism from the medium. If you replace HTTPS connections by FTP, or even by media such as tape or floppy disks (remember those?), this system still works. You can’t say the same of certificate verification using HTTPS.

Patterns: sacrificial front-end

Over the years, I’ve borrowed and invented a number of design patterns for projects of all kinds. Most, if not all, were doubtless already invented and used, but mostly I didn’t know that then. Most of my uses of these patterns date back at least 15 years, often 20, but I’m seeing more and more of them appear in modern frameworks and methodologies. So this is my way of saying, “I told you so”, which is vaguely satisfying. To me.

Forgive the names; I have a hard time coming up with suitable labels for them.

Blackboard architecture

The Blackboard architecture is well-known. Or should be, except it seems I always need to explain it when it comes up. It has a lot of great aspects and results in effective and extremely decoupled designs. I’ll most certainly come back to it several times.

A blackboard is a shared data source. Some processes write messages there, while other processes read them. The different processes never need to talk to each other directly or even know of each other’s existence.
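As a rough sketch of the idea, assuming the blackboard is a single append-only SQL table (here an in-memory SQLite database; the table layout and function names are hypothetical):

```python
import sqlite3

def open_blackboard() -> sqlite3.Connection:
    """Create the shared data source: one append-only table of messages."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE messages ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "topic TEXT NOT NULL, "
        "body TEXT NOT NULL)"
    )
    return db

def post(db: sqlite3.Connection, topic: str, body: str) -> int:
    """A writer adds a message; it never updates or deletes existing rows."""
    cur = db.execute("INSERT INTO messages (topic, body) VALUES (?, ?)", (topic, body))
    db.commit()
    return cur.lastrowid

def read_since(db: sqlite3.Connection, topic: str, last_id: int):
    """A reader fetches everything on a topic that is newer than what it has seen."""
    return db.execute(
        "SELECT id, body FROM messages WHERE topic = ? AND id > ? ORDER BY id",
        (topic, last_id),
    ).fetchall()

board = open_blackboard()
post(board, "lab-results", "sample 17 received")
post(board, "billing", "invoice 3 created")
post(board, "lab-results", "sample 17 analysed")

last_seen = 0  # each reader keeps its own position; writers know nothing about it
for message_id, body in read_since(board, "lab-results", last_seen):
    print(message_id, body)
    last_seen = message_id
```

The writer and the reader share nothing but the table, so either one could be replaced by a process written in another language on another machine.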

From this flow a number of advantages, a few of which are:

- Different cooperating services can be based on entirely different languages and platforms.

- The interaction is usually one way (compare to Facebook’s Flux and React), greatly simplifying interactions.

- If done right, the data structures are immutable, eliminating contention problems.

Receiving messages

Hop, skip, and jump

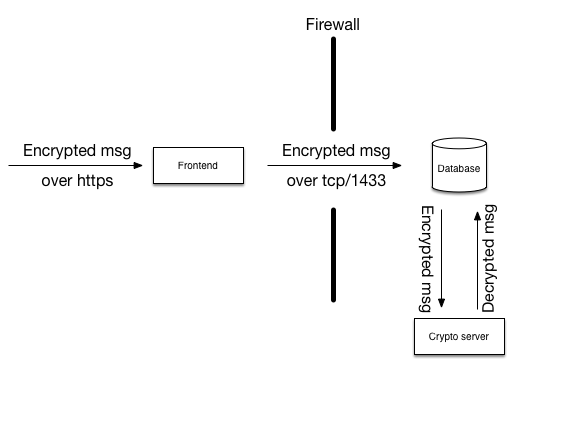

Let’s get to my first blackboard-based pattern, namely how to protect a front-end machine from compromise. In this design, the front-end machine is an Internet facing computer receiving medical documents from a number of clients around the net. The documents arrive individually encrypted using the server’s public key. The front-end machine is assumed to be hacked sooner or later and we don’t want such a hack to lead to the ability of the hacker to get at decrypted documents or other secrets.

So, what we do is we let the front-end machine take each received and encrypted message and store it in an SQL database located in its own network segment. The message ends up in a table that only holds encrypted messages, nothing else.

Another machine on that protected network segment picks up the encrypted messages from the database, decrypts them, and stores the decrypted messages in another table.

The “front-end” machine is exposed to the internet, so let’s assume it is completely compromised. In that case, the hacker has access to all the secrets that are kept on that machine and has root. This would allow the hacker to do anything on that machine that any of my programs are allowed to do.

The first role of the front-end machine is to authenticate clients that connect. We can safely assume that the hacker won’t need to authenticate anymore.

The second role of the front-end machine is to receive messages from the client. These messages are then sent on to the database through a firewall that only allows port 1433 to connect to the database. The login to the database for the front-end machine is kept on the front-end machine so the hacker can use that authentication. However, the only thing this user is permitted is access to a number of stored procedures tailored specifically to the needs of the front-end. Among these stored procedures, there are procedures to deliver messages to the database, but not much more. There is most definitely no right granted for direct table access. In other words, the hacker can deliver messages to the incoming message table, but nothing else.

Behind the firewall there is another machine that has no connection to anything except the database. That machine has access to a small number of stored procedures tailored for its use, among which are procedures to pick up new incoming messages and deliver decrypted messages back to the database.

Each crypto server first verifies that the message it picks up from the database carries a valid digital signature from a registered user system, and only then does it decrypt the message with its private key. If the hacker on the front-end had delivered fake messages, these would be detected during signature verification and discarded.
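The receiving path can be sketched like this, under several assumptions that are not in the design above: SQLite stands in for the SQL server, plain functions stand in for the stored procedures, the signature is taken over the ciphertext, and the crypto is a toy textbook RSA (not secure, and limited to very short messages) that stands in for a real library. All names are hypothetical.

```python
import hashlib
import sqlite3

# Toy RSA: NOT secure, a stand-in for a real crypto library.
def make_keypair(p, q, e=65537):
    n = p * q
    return (n, e), (n, pow(e, -1, (p - 1) * (q - 1)))  # (public, private)

def _digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def sign(data: bytes, private_key) -> int:
    n, d = private_key
    return pow(_digest(data, n), d, n)

def verify(data: bytes, signature: int, public_key) -> bool:
    n, e = public_key
    return pow(signature, e, n) == _digest(data, n)

def encrypt(plaintext: bytes, public_key) -> int:
    n, e = public_key
    m = int.from_bytes(plaintext, "big")
    assert m < n, "the toy scheme only handles very short messages"
    return pow(m, e, n)

def decrypt(ciphertext: int, private_key) -> bytes:
    n, d = private_key
    m = pow(ciphertext, d, n)
    return m.to_bytes((m.bit_length() + 7) // 8, "big")

def deliver(db, sender, ciphertext, signature):
    """Front-end side: the only thing its database login is allowed to do."""
    db.execute("INSERT INTO incoming (sender, ciphertext, signature) VALUES (?, ?, ?)",
               (sender, str(ciphertext), str(signature)))

def process_incoming(db, server_private_key, registered_keys):
    """Crypto server side: verify the signature first, decrypt only if it holds."""
    rows = db.execute(
        "SELECT id, sender, ciphertext, signature FROM incoming WHERE processed = 0"
    ).fetchall()
    for row_id, sender, ciphertext, signature in rows:
        key = registered_keys.get(sender)
        if (key is not None and ciphertext.isdigit() and signature.isdigit()
                and verify(ciphertext.encode(), int(signature), key)):
            plaintext = decrypt(int(ciphertext), server_private_key)
            db.execute("INSERT INTO decrypted (sender, plaintext) VALUES (?, ?)",
                       (sender, plaintext.decode()))
        # Fake messages are marked as handled and never decrypted.
        db.execute("UPDATE incoming SET processed = 1 WHERE id = ?", (row_id,))

client_pub, client_priv = make_keypair(2**61 - 1, 2**89 - 1)
server_pub, server_priv = make_keypair(2**107 - 1, 2**127 - 1)

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE incoming (id INTEGER PRIMARY KEY, sender TEXT, "
           "ciphertext TEXT, signature TEXT, processed INTEGER DEFAULT 0)")
db.execute("CREATE TABLE decrypted (id INTEGER PRIMARY KEY, sender TEXT, plaintext TEXT)")

genuine = encrypt(b"blood test: normal", server_pub)
deliver(db, "clinic-7", genuine, sign(str(genuine).encode(), client_priv))
deliver(db, "clinic-7", encrypt(b"fake result", server_pub), 12345)  # forged on the front-end

process_incoming(db, server_priv, {"clinic-7": client_pub})
print(db.execute("SELECT sender, plaintext FROM decrypted").fetchall())
```

In this sketch both roles share one connection for brevity. In the design above they are separate machines with separate database logins, and the private key exists only on the crypto server.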

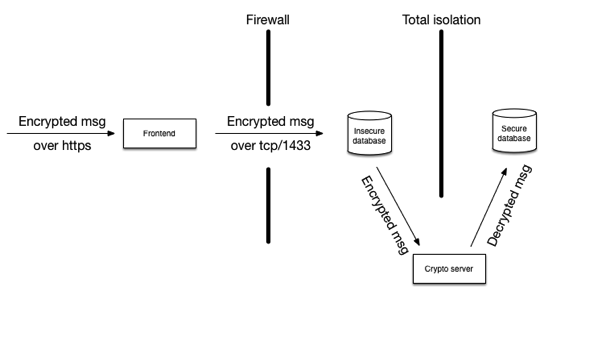

With this design, the hacker on the front-end has just a very narrow channel through which to jump from the front-end to the database, namely through the port 1433 and the SQL server software itself. But let’s assume he succeeds, somehow. If we’re really paranoid, we’d split the database into two instances on different machines, completely isolated from each other and only bridged by the crypto servers as in the image above.

To get at the plain text content of the messages, the hacker in this case, if coming from the internet at least, needs to:

- compromise the front-end

- crawl through 1433 and compromise the database (or compromise the firewall, then the database server)

- via a tailored message, compromise the crypto routines on the crypto server

- get at the secure database

The crypto server does not have any communication to the internet whatsoever, so even if it ever got compromised, it could only be controlled through messages passing through the database, and would need to exfiltrate that same way. Not impossible, but hardly easy, either. The hacker would probably choose some other way to get at the secure database.

So, what about outgoing?

The outgoing messages follow the exact opposite path. Do I really have to draw you a picture?

Privacy shield…

Completely worthless. Same pig, different name. We can’t trust the EU.

5 things you need to know about the EU-US Privacy Shield agreement | Macworld

What social networks can become

Really scary shit straight outta China. What actually stops our common social networks from becoming that same thing?