Now we’ve arrived at the last of the solutions in my list, namely “Opening the market for smaller entrepreneurs”. There are a number of reasons we have to do this, and I’ve touched on most of them before in other contexts.

The advantages of having a large all-in-one vendor to deliver a single system doing everything for your electronic health-care record needs are:

- You don’t have to worry about interconnections, because there aren’t any

- You don’t have to figure out who to call when things go wrong, because there’s only one vendor to call

- You can reduce your support staff, or at least you may think so initially

- You can avoid all arguments about requirements from the users, since there is nothing you can change anyway

- It looks like good leadership to the uninitiated, just like Napoleon probably looked pretty good at Waterloo, at least to start with

The disadvantages are:

- Since you have no escape once the decision is made, the costs are usually much higher than planned or promised

- There is only one support organization, and they are usually pretty unpleasant to deal with, and almost always powerless to do anything

- Any extra functionality you need must come from the same vendor, and will cost a fortune, and will always be late, bug-ridden, and wrong

- The system will be worst-of-breed in every individual area of functionality; its only distinguishing characteristic is that it is all-encompassing (like mustard gas over Ieper)

- The system will never be based on a simple architecture or interface standards; there is no need for it, the vendor usually doesn’t have the expertise for it, and the designers have no incentives to do a quality job

- Since quality is best measured as the simplicity and orthogonality of interfaces and public specs, and large vendors don’t deliver either of these, there is no objective measure of quality, hence there is no quality (there’s a law in there somewhere about “that which is not measurable does not exist”; was it Newton who said that?)

- Due to poor architecture, the system will almost certainly be built from too few and too large blocks of functionality, which makes it harder than necessary to maintain (yes, the vendor maintains it for you, but you pay for it and suffer the poor quality)

Everybody knows the proverb about power: it corrupts. Don’t give that kind of power to a single vendor; he is going to misuse it to his own advantage. It’s not a question of how nice and well-meaning the CEO is. It is his duty to screw you to the hilt. That’s what he’s being paid to do, and if he doesn’t, he’ll lose his job.

But if we want the customers to choose best-of-breed solutions from smaller vendors, we have to be able to offer them these solutions in a way that makes it technically, economically, and politically feasible to purchase and install them. Today, that is far from the case. Smaller vendors behave just like the big vendors, but with considerably less success, spending most of their energy bickering about details and suing each other and the major vendors when things don’t go as they please (which they never do). If all that energy went into making better products instead, we’d have amazingly great software by now.

The major problem is that even the smallest vendor would rather go bust trying to build a complete all-in-one system for electronic health-care records than concede even a part of the whole to a competitor, however much better that competitor is at that part. And while the small vendors fight their little wars, the big ones run off with the prize. This has got to stop.

One way would be for the government to step in and mandate interfaces, modularity, and interconnection standards. And they do, except this approach doesn’t work. When governments do this, they select projects on the advice of people whose livelihood depends on the creation of long-lived committees where they can sit forever producing documents of all kinds. So all you get is high cost, eternal committees, and no joy. Since no small vendor could ever afford to keep an influential presence on these committees, the work will never result in anything that is useful to the smaller vendors. The large vendors, meanwhile, don’t need any standards or rules of any kind, since they only connect to themselves, and they love to blame the lack of useful standards for not being able to accommodate any other vendor’s systems. This way, standards consultants standardize, large vendors ignore the standards and keep selling, and everyone is happy except for the small vendors and, of course, the users, who keep paying through the nose for very little in return.

There’s no way out of this for the small vendors and the users if you need official standards to interoperate, but luckily for us, official standards are largely useless and unnecessary even in the best of cases. All it takes is for one or two small vendors to publish de facto standards, simple and practical enough for most other vendors to pick up and use. I’ve personally seen this happen in Belgium in the 1980s and 1990s, where a multitude of smaller EHR systems used each other’s lab and referral document standards instead of waiting for official CEN standards, which didn’t work at all once published (see my previous blog post). In the US, standards are generally not invented by standards bodies, but selected from de facto standards already in use and then approved, which explains why US standards usually do work, while European standards don’t.

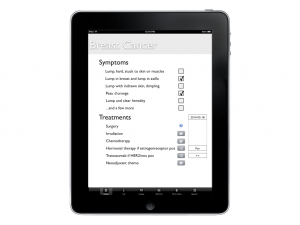

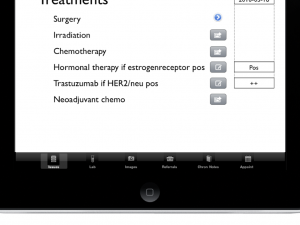

Where does all this leave us? I see only one way of getting out of this mess, and that is for smaller vendors to start sharing de facto standards with each other. Which leads directly to my conclusion: everything I do with iotaMed will be open for use by others. I will define how issue templates will look and how issue worksheets and observations will be structured, but those definitions are free to use by any vendor, small or large. At the start, I reserve the right to control which document structures and interfaces can be called “iota” and “iotaMed”, but as soon as other players take an active and constructive part in all this, I fully intend to share that control. An important reason not to let go of it from the start is that I am truly afraid of a large “committee” springing up whose only interest will be to make it cost more, increase the page count, and take forever to produce results. And that, I will fight tooth and nail.

On the other hand, I’ll develop the iotaMed interface for the iPad and I intend to publish the source for that, but keep the right to sell licenses for commercial use, while non-profit use will be free. Exactly where to draw that line needs to be defined later, but it would be a really good thing if several vendors agreed on a common set of principles, since that would make things easier for our customers to handle. A mixed license model with GPL and a regular commercial license seems to be the way to go. But in the beginning, we have to share as much as possible, so we can create a market where we all can add products and knowledge. Without bootstrapping that market, there will be no products or services to sell later.